An Example of the Adam Optimizer in PyTorch

Introduction

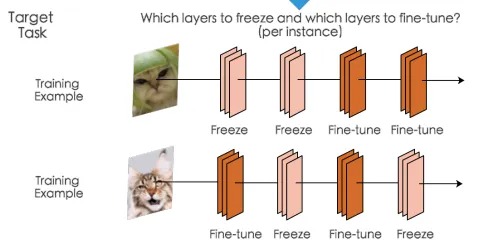

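When fine-tuning a model, it is often useful to freeze some of its parameters. Which ones to freeze depends on the case at hand, as in the two examples illustrated above: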

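As we can see, in the first example we freeze the first two layers and update the parameters of the last two, while in the second example we freeze the second and fourth layers and fine-tune the others. There are many other situations where this technique is useful, and if you are reading this article you probably have one of them.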

Problem setup

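To simplify the problem, let's assume we have a model that accepts two different types of input, one with 3 features and one with 2 features. Depending on the input type, we pass the input through one of two different initial layers, so during training we only want to update the parameters associated with that input. As shown below, when input1 is passed we want to freeze the hidden_task2 layer, and when input2 is passed we want to freeze the hidden_task1 layer.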

    from pprint import pprint

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # use the GPU when one is available
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


    class Network(nn.Module):
        def __init__(self):
            super().__init__()
            # Inputs to hidden layer linear transformation
            self.hidden_task1 = nn.Linear(3, 3, bias=False)
            self.hidden_task2 = nn.Linear(2, 3, bias=False)
            self.output = nn.Linear(3, 4, bias=False)
            # Define sigmoid activation and softmax output
            self.sigmoid = nn.Sigmoid()
            self.softmax = nn.Softmax(dim=1)

        def forward(self, x, task='task1'):
            if task == 'task1':
                x = self.hidden_task1(x)
            else:
                x = self.hidden_task2(x)
            x = self.sigmoid(x)
            x = self.output(x)
            x = self.softmax(x)
            return x

        def freeze_params(self, params_str):
            # drop the gradient so the optimizer skips these parameters
            for n, p in self.named_parameters():
                if n in params_str:
                    p.grad = None

        def freeze_params_grad(self, params_str):
            # stop autograd from computing gradients for these parameters
            for n, p in self.named_parameters():
                if n in params_str:
                    p.requires_grad = False

        def unfreeze_params_grad(self, params_str):
            for n, p in self.named_parameters():
                if n in params_str:
                    p.requires_grad = True


    # define input and target
    input1 = torch.randn(10, 3).to(device)
    input2 = torch.randn(10, 2).to(device)
    target1 = torch.randint(0, 4, (10, )).long().to(device)
    target2 = torch.randint(0, 4, (10, )).long().to(device)

    net = Network().to(device)


    # helper
    def changed_parameters(initial, final):
        for n, p in initial.items():
            if not torch.allclose(p, final[n]):
                print("Changed : ", n)

In a world with only the SGD optimizer

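If we only used the SGD optimizer, the problem would be solved simply by setting requires_grad = False: no gradients are computed for the parameters we specify, and we get the result we want.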

    original_param = {n: p.clone() for (n, p) in net.named_parameters()}
    print("Original params ")
    pprint(original_param)
    print(100 * "=")

    # let's define 2 loss functions (we could define only one in this
    # case, as they are the same)
    criterion1 = nn.CrossEntropyLoss()
    criterion2 = nn.CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=0.9)

    # set requires_grad to False for selected layers
    net.freeze_params_grad(['hidden_task2.weight'])

    # output for task 1 - we want to keep frozen task2 layer parameters
    output = net(input1, task='task1')
    optimizer.zero_grad()  # zero the gradient buffers
    loss1 = criterion1(output, target1)
    loss1.backward()
    optimizer.step()
    print("States optimizer 1: ")
    print(optimizer.state)

    # snapshot the parameters after the task 1 step and report what moved
    print("Params after task 1 update ")
    params_hid1 = {n: p.clone() for (n, p) in net.named_parameters()}
    pprint(params_hid1)
    print(100 * "=")
    changed_parameters(original_param, params_hid1)

    # set requires_grad back to True for the task 2 layer,
    # and freeze the task 1 layer for the next step
    net.unfreeze_params_grad(['hidden_task2.weight'])
    net.freeze_params_grad(['hidden_task1.weight'])

    # output for task 2 - we want to keep frozen task1 layer parameters
    output1 = net(input2, task='task2')
    optimizer.zero_grad()  # zero the gradient buffers
    loss2 = criterion2(output1, target2)
    loss2.backward()
    optimizer.step()  # Does the update
    print("States optimizer 2: ")
    print(optimizer.state)

    # set requires_grad back to True for selected layers
    net.unfreeze_params_grad(['hidden_task1.weight'])

    print("Params after task 2 update ")
    params_hid2 = {n: p.clone() for (n, p) in net.named_parameters()}
    pprint(params_hid2)
    changed_parameters(params_hid1, params_hid2)

In the output below, we can see that the "Changed" parameters after the task 1 and task 2 updates are the correct ones, so we get the expected result.

    Original params
    {'hidden_task1.weight': tensor([[-0.0043, 0.3097, -0.4752], [-0.4249, -0.2224, 0.1548], [-0.0114, 0.4578, -0.0512]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1871, -0.2137], [-0.1390, -0.6755], [-0.4683, -0.2915]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.0214, 0.2282, 0.3464], [-0.3914, -0.2514, 0.2097], [ 0.4794, -0.1188, 0.4320], [-0.0931, 0.0611, 0.5228]], device='cuda:0', grad_fn=<CloneBackward0>)}
    ====================================================================================================
    Params after task 1 update
    {'hidden_task1.weight': tensor([[ 0.0010, 0.3107, -0.4746], [-0.4289, -0.2261, 0.1547], [-0.0105, 0.4596, -0.0528]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1871, -0.2137], [-0.1390, -0.6755], [-0.4683, -0.2915]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.0554, 0.2788, 0.3800], [-0.4105, -0.2702, 0.1917], [ 0.4552, -0.1496, 0.4091], [-0.0838, 0.0601, 0.5301]], device='cuda:0', grad_fn=<CloneBackward0>)}
    ====================================================================================================
    Changed : hidden_task1.weight
    Changed : output.weight
    Params after task 2 update
    {'hidden_task1.weight': tensor([[ 0.0010, 0.3107, -0.4746], [-0.4289, -0.2261, 0.1547], [-0.0105, 0.4596, -0.0528]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1906, -0.2102], [-0.1412, -0.6783], [-0.4657, -0.2929]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.0386, 0.2673, 0.3726], [-0.3818, -0.2414, 0.2232], [ 0.4402, -0.1698, 0.3898], [-0.0807, 0.0631, 0.5254]], device='cuda:0', grad_fn=<CloneBackward0>)}
    Changed : hidden_task2.weight
    Changed : output.weight

The complexity of adaptive optimizers

Now let's run the same code again, but with the Adam optimizer:

    optimizer = optim.Adam(net.parameters(), lr=0.9)

In the "Changed" section of the output, we now see that hidden_task1.weight also changed after the second task's update, which is not what we want.

    Original params
    {'hidden_task1.weight': tensor([[-0.0043, 0.3097, -0.4752], [-0.4249, -0.2224, 0.1548], [-0.0114, 0.4578, -0.0512]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1871, -0.2137], [-0.1390, -0.6755], [-0.4683, -0.2915]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.0214, 0.2282, 0.3464], [-0.3914, -0.2514, 0.2097], [ 0.4794, -0.1188, 0.4320], [-0.0931, 0.0611, 0.5228]], device='cuda:0', grad_fn=<CloneBackward0>)}
    ====================================================================================================
    Params after task 1 update
    {'hidden_task1.weight': tensor([[ 0.8957, 1.2069, 0.4291], [-1.3211, -1.1204, -0.7465], [ 0.8887, 1.3537, -0.9508]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1871, -0.2137], [-0.1390, -0.6755], [-0.4683, -0.2915]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.9212, 1.1262, 1.2433], [-1.2879, -1.1492, -0.6922], [-0.4249, -1.0177, -0.4718], [ 0.8078, -0.8394, 1.4181]], device='cuda:0', grad_fn=<CloneBackward0>)}
    ====================================================================================================
    Changed : hidden_task1.weight
    Changed : output.weight
    Params after task 2 update
    {'hidden_task1.weight': tensor([[ 1.4907, 1.7991, 1.0283], [-1.9122, -1.7133, -1.3428], [ 1.4837, 1.9445, -1.5453]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[-0.7146, -1.1118], [-1.0377, 0.2305], [-1.3641, -1.1889]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.9372, 1.3922, 1.5032], [-1.5886, -1.4844, -0.9789], [-0.8855, -1.5812, -1.0326], [ 1.6785, -0.2048, 2.3004]], device='cuda:0', grad_fn=<CloneBackward0>)}
    Changed : hidden_task1.weight
    Changed : hidden_task2.weight
    Changed : output.weight

Let's try to understand what is happening here. The update rule for SGD is defined as follows:

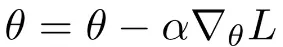

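Here alpha is the learning rate and nabla L is the gradient with respect to the parameters. As we can see, if the gradient is zero the parameters are not updated, because the update rule is a function of the gradient only. When we set requires_grad = False, the gradients for those layers are not computed, so they contribute nothing to the update.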
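For reference, the SGD rule described here is commonly written as below. The symbol θ for the parameters and the step index t are notation assumed for this sketch; the text itself only names alpha and nabla L.

```latex
\theta_{t+1} = \theta_t - \alpha \, \nabla L(\theta_t)
\qquad\Longrightarrow\qquad
\nabla L(\theta_t) = 0 \;\; \text{implies} \;\; \theta_{t+1} = \theta_t
```

The right-hand side has no term other than the gradient, which is why a zero gradient leaves a frozen layer exactly where it was.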

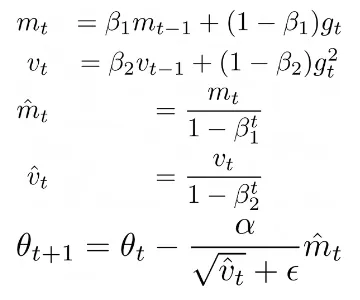

What about adaptive optimizers such as Adam, whose update rule is not a function of the gradient alone? Let's look at Adam:

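Here Beta1 and Beta2 are hyperparameters, alpha is the learning rate, mt is the first moment of the gradient gt, and vt is its second moment. This update rule lets the optimizer compute an adaptive learning rate for each parameter. Most importantly for our problem, even when the current gradient gt is zero for a parameter frozen with requires_grad = False, the optimizer still uses the stored values of mt and vt to update that parameter. Indeed, if we print optimizer.state, we can see that for each parameter the optimizer stores step (the number of gradient updates applied to that parameter), exp_avg (the first moment) and exp_avg_sq (the second moment):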
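To make the effect concrete, here is a minimal scalar sketch of a single Adam update written in plain Python. The function name `adam_step`, the sample gradient value and the default hyperparameters are assumptions chosen for illustration (only lr=0.9 comes from the article); it follows the standard bias-corrected Adam formulas rather than any particular library's code.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.9, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad         # first moment (exp_avg)
    v = beta2 * v + (1 - beta2) * grad * grad  # second moment (exp_avg_sq)
    m_hat = m / (1 - beta1 ** t)               # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)               # bias-corrected second moment
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


p0 = 0.5
# Step 1: a real (illustrative) gradient arrives and fills m and v.
p1, m1, v1 = adam_step(p0, grad=-6e-4, m=0.0, v=0.0, t=1)
# Step 2: the gradient is exactly zero, but m and v are not.
p2, m2, v2 = adam_step(p1, grad=0.0, m=m1, v=v1, t=2)

# Plain SGD with the same zero gradient leaves the parameter untouched.
sgd_p2 = p1 - 0.9 * 0.0

print("Adam move on the zero-gradient step:", p2 - p1)
print("SGD move on the zero-gradient step: ", sgd_p2 - p1)
```

With a zero gradient the first moment only decays by a factor of beta1, so the ratio of the two moments stays far from zero and the parameter keeps moving. That decay is visible in the printed state as well: the exp_avg entries at step 2 are about 0.9 times their step 1 values.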

    # optimizer step 1
    defaultdict(<class 'dict'>, {Parameter containing:
    tensor([[ 0.8957, 1.2069, 0.4291], [-1.3211, -1.1204, -0.7465], [ 0.8887, 1.3537, -0.9508]], device='cuda:0', requires_grad=True): {'step': tensor(1.),
      'exp_avg': tensor([[-5.9304e-04, -1.0966e-04, -5.9985e-05], [ 4.4068e-04, 4.1636e-04, 1.7705e-05], [-1.0544e-04, -2.0357e-04, 1.7783e-04]], device='cuda:0'),
      'exp_avg_sq': tensor([[3.5170e-08, 1.2025e-09, 3.5982e-10], [1.9420e-08, 1.7336e-08, 3.1345e-11], [1.1118e-09, 4.1440e-09, 3.1623e-09]], device='cuda:0')}, Parameter containing:
    tensor([[ 0.9212, 1.1262, 1.2433], [-1.2879, -1.1492, -0.6922], [-0.4249, -1.0177, -0.4718], [ 0.8078, -0.8394, 1.4181]], device='cuda:0', requires_grad=True): {'step': tensor(1.),
      'exp_avg': tensor([[-0.0038, -0.0056, -0.0037], [ 0.0021, 0.0021, 0.0020], [ 0.0027, 0.0034, 0.0025], [-0.0010, 0.0001, -0.0008]], device='cuda:0'),
      'exp_avg_sq': tensor([[1.4261e-06, 3.1517e-06, 1.3953e-06], [4.4782e-07, 4.3352e-07, 3.9994e-07], [7.2213e-07, 1.1702e-06, 6.4754e-07], [1.0547e-07, 1.2353e-09, 6.5470e-08]], device='cuda:0')}})

    # optimizer step 2
    tensor([[ 1.4907, 1.7991, 1.0283], [-1.9122, -1.7133, -1.3428], [ 1.4837, 1.9445, -1.5453]], device='cuda:0', requires_grad=True): {'step': tensor(2.),
      'exp_avg': tensor([[-5.3374e-04, -9.8693e-05, -5.3987e-05], [ 3.9661e-04, 3.7472e-04, 1.5934e-05], [-9.4899e-05, -1.8321e-04, 1.6005e-04]], device='cuda:0'),
      'exp_avg_sq': tensor([[3.5135e-08, 1.2013e-09, 3.5946e-10], [1.9400e-08, 1.7318e-08, 3.1314e-11], [1.1107e-09, 4.1398e-09, 3.1592e-09]], device='cuda:0')}, Parameter containing:
    tensor([[ 0.9372, 1.3922, 1.5032], [-1.5886, -1.4844, -0.9789], [-0.8855, -1.5812, -1.0326], [ 1.6785, -0.2048, 2.3004]], device='cuda:0', requires_grad=True): {'step': tensor(2.),
      'exp_avg': tensor([[-0.0002, -0.0025, -0.0017], [ 0.0011, 0.0011, 0.0010], [ 0.0019, 0.0029, 0.0021], [-0.0028, -0.0015, -0.0014]], device='cuda:0'),
      'exp_avg_sq': tensor([[2.4608e-06, 3.7819e-06, 1.6833e-06], [5.1839e-07, 4.8712e-07, 4.7173e-07], [7.4856e-07, 1.1713e-06, 6.4888e-07], [4.4950e-07, 2.6660e-07, 1.1588e-07]], device='cuda:0')}, Parameter containing:
    tensor([[-0.7146, -1.1118], [-1.0377, 0.2305], [-1.3641, -1.1889]], device='cuda:0', requires_grad=True): {'step': tensor(1.),
      'exp_avg': tensor([[ 0.0009, 0.0011], [ 0.0045, -0.0002], [ 0.0003, 0.0012]], device='cuda:0'),
      'exp_avg_sq': tensor([[8.7413e-08, 1.3188e-07], [1.9946e-06, 4.3840e-09], [8.1403e-09, 1.3691e-07]], device='cuda:0')}})

We can see that after the first optimizer.step() only two parameters are in the optimizer state: hidden_task1 and output. After the second optimizer step all the parameters are present, but note that hidden_task1 has been updated twice (its step value is 2), which should not have happened.

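So how do we deal with this? The solution is actually quite simple: instead of using the requires_grad setting, set the grad of the parameters to None. PyTorch optimizers skip any parameter whose grad is None, so neither its weights nor its stored moments are touched. The code looks like this: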

    original_param = {n: p.clone() for (n, p) in net.named_parameters()}
    print("Original params ")
    pprint(original_param)
    print(100 * "=")

    # define two loss functions (we could define only one in this case,
    # as they are the same)
    criterion1 = nn.CrossEntropyLoss()
    criterion2 = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=0.9)

    # output for task 1 - we want to keep frozen task2 layer parameters
    output = net(input1, task='task1')
    optimizer.zero_grad()  # zero the gradient buffers
    loss1 = criterion1(output, target1)
    loss1.backward()
    # freeze the parameters here!
    net.freeze_params(['hidden_task2.weight'])
    optimizer.step()

    # snapshot the parameters after the task 1 step and report what moved
    print("Params after task 1 update ")
    params_hid1 = {n: p.clone() for (n, p) in net.named_parameters()}
    pprint(params_hid1)
    print(100 * "=")
    changed_parameters(original_param, params_hid1)

    # output for task 2 - we want to keep frozen task1 layer parameters
    output = net(input2, task='task2')
    optimizer.zero_grad()  # zero the gradient buffers
    loss2 = criterion2(output, target2)
    loss2.backward()
    # freeze the parameters here!
    net.freeze_params(['hidden_task1.weight'])
    optimizer.step()  # Does the update

    print("Params after task 2 update ")
    params_hid2 = {n: p.clone() for (n, p) in net.named_parameters()}
    pprint(params_hid2)
    changed_parameters(params_hid1, params_hid2)

Note that we need to set grad to None after loss.backward() and before optimizer.step(), because the gradients of all the parameters have to be computed first.
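The difference between a zero gradient and a None gradient can be sketched with a toy optimizer in plain Python. Everything here is hypothetical and for illustration only: the `ToyAdam` class, the `run` helper, the scalar "parameters" and the gradient values are not part of the article's code. The class only mimics two behaviours discussed in the text, namely lazily created per-parameter state and skipping entries whose gradient is None.

```python
import math


class ToyAdam:
    """Hypothetical scalar-only stand-in for an Adam-style optimizer."""

    def __init__(self, params, lr=0.9, beta1=0.9, beta2=0.999, eps=1e-8):
        self.params = params                    # name -> float value
        self.grads = {n: None for n in params}  # name -> float or None
        self.state = {}                         # created lazily per parameter
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps

    def step(self):
        for name, grad in self.grads.items():
            if grad is None:  # no gradient: skip weights and state entirely
                continue
            s = self.state.setdefault(
                name, {"step": 0, "exp_avg": 0.0, "exp_avg_sq": 0.0})
            s["step"] += 1
            s["exp_avg"] = self.beta1 * s["exp_avg"] + (1 - self.beta1) * grad
            s["exp_avg_sq"] = (self.beta2 * s["exp_avg_sq"]
                               + (1 - self.beta2) * grad * grad)
            m_hat = s["exp_avg"] / (1 - self.beta1 ** s["step"])
            v_hat = s["exp_avg_sq"] / (1 - self.beta2 ** s["step"])
            self.params[name] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


def run(second_step_grad):
    """Two steps; hidden_task1 receives `second_step_grad` on the second one."""
    opt = ToyAdam({"hidden_task1": 0.5, "hidden_task2": -0.2})
    # Task 1 step: only hidden_task1 has a gradient.
    opt.grads = {"hidden_task1": -6e-4, "hidden_task2": None}
    opt.step()
    after_task1 = opt.params["hidden_task1"]
    # Task 2 step: hidden_task2 trains, hidden_task1 should stay frozen.
    opt.grads = {"hidden_task1": second_step_grad, "hidden_task2": 3e-4}
    opt.step()
    return after_task1, opt.params["hidden_task1"], opt.state["hidden_task1"]["step"]


zero_before, zero_after, zero_steps = run(0.0)   # gradient zeroed
none_before, none_after, none_steps = run(None)  # gradient set to None

print(zero_after != zero_before, zero_steps)  # prints: True 2
print(none_after == none_before, none_steps)  # prints: True 1
```

The zeroed gradient still advances the step counter and moves the parameter, while the None gradient leaves both untouched. One version-related caveat worth checking against your installed release: recent PyTorch versions default `optimizer.zero_grad()` to `set_to_none=True`, so whether the zero-gradient path is actually hit may depend on the version in use.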

If we now run this code with the Adam optimizer, the results are as expected.

    Original params
    {'hidden_task1.weight': tensor([[-0.0043, 0.3097, -0.4752], [-0.4249, -0.2224, 0.1548], [-0.0114, 0.4578, -0.0512]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1871, -0.2137], [-0.1390, -0.6755], [-0.4683, -0.2915]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.0214, 0.2282, 0.3464], [-0.3914, -0.2514, 0.2097], [ 0.4794, -0.1188, 0.4320], [-0.0931, 0.0611, 0.5228]], device='cuda:0', grad_fn=<CloneBackward0>)}
    ====================================================================================================
    Params after task 1 update
    {'hidden_task1.weight': tensor([[ 0.8957, 1.2069, 0.4291], [-1.3211, -1.1204, -0.7465], [ 0.8887, 1.3537, -0.9508]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[ 0.1871, -0.2137], [-0.1390, -0.6755], [-0.4683, -0.2915]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.9212, 1.1262, 1.2433], [-1.2879, -1.1492, -0.6922], [-0.4249, -1.0177, -0.4718], [ 0.8078, -0.8394, 1.4181]], device='cuda:0', grad_fn=<CloneBackward0>)}
    ====================================================================================================
    Changed : hidden_task1.weight
    Changed : output.weight
    Params after task 2 update
    {'hidden_task1.weight': tensor([[ 0.8957, 1.2069, 0.4291], [-1.3211, -1.1204, -0.7465], [ 0.8887, 1.3537, -0.9508]], device='cuda:0', grad_fn=<CloneBackward0>),
     'hidden_task2.weight': tensor([[-0.7146, -1.1118], [-1.0377, 0.2305], [-1.3641, -1.1889]], device='cuda:0', grad_fn=<CloneBackward0>),
     'output.weight': tensor([[ 0.9372, 1.3922, 1.5032], [-1.5886, -1.4844, -0.9789], [-0.8855, -1.5812, -1.0326], [ 1.6785, -0.2048, 2.3004]], device='cuda:0', grad_fn=<CloneBackward0>)}
    Changed : hidden_task2.weight
    Changed : output.weight

In addition, optimizer.state is now different: in the second optimizer step hidden_task1 was not updated, and its step value is 1.

    tensor([[ 0.8957, 1.2069, 0.4291], [-1.3211, -1.1204, -0.7465], [ 0.8887, 1.3537, -0.9508]], device='cuda:0', requires_grad=True): {'step': tensor(1.),
      'exp_avg': tensor([[-5.9304e-04, -1.0966e-04, -5.9985e-05], [ 4.4068e-04, 4.1636e-04, 1.7705e-05], [-1.0544e-04, -2.0357e-04, 1.7783e-04]], device='cuda:0'),
      'exp_avg_sq': tensor([[3.5170e-08, 1.2025e-09, 3.5982e-10], [1.9420e-08, 1.7336e-08, 3.1345e-11], [1.1118e-09, 4.1440e-09, 3.1623e-09]], device='cuda:0')}, Parameter containing:
    tensor([[ 0.9372, 1.3922, 1.5032], [-1.5886, -1.4844, -0.9789], [-0.8855, -1.5812, -1.0326], [ 1.6785, -0.2048, 2.3004]], device='cuda:0', requires_grad=True): {'step': tensor(2.),
      'exp_avg': tensor([[-0.0002, -0.0025, -0.0017], [ 0.0011, 0.0011, 0.0010], [ 0.0019, 0.0029, 0.0021], [-0.0028, -0.0015, -0.0014]], device='cuda:0'),
      'exp_avg_sq': tensor([[2.4608e-06, 3.7819e-06, 1.6833e-06], [5.1839e-07, 4.8712e-07, 4.7173e-07], [7.4856e-07, 1.1713e-06, 6.4888e-07], [4.4950e-07, 2.6660e-07, 1.1588e-07]], device='cuda:0')}, Parameter containing:
    tensor([[-0.7146, -1.1118], [-1.0377, 0.2305], [-1.3641, -1.1889]], device='cuda:0', requires_grad=True): {'step': tensor(1.),
      'exp_avg': tensor([[ 0.0009, 0.0011], [ 0.0045, -0.0002], [ 0.0003, 0.0012]], device='cuda:0'),
      'exp_avg_sq': tensor([[8.7413e-08, 1.3188e-07], [1.9946e-06, 4.3840e-09], [8.1403e-09, 1.3691e-07]], device='cuda:0')}})

Distributed Data Parallel

One more thing to note: if we want to support multiple GPUs in PyTorch with DistributedDataParallel, we need to modify the implementation described above slightly, as follows:

This implementation is slightly more complex; if you know a cleaner way to write it, please share it in the comments!

Feedback

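Any feedback on the above would be greatly appreciated: if you know of any potential issues with this approach, or of other ways to achieve the same thing, please let me know.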

Conclusion

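In this article, we described how to freeze and unfreeze some layers during training. If you want certain layers to stay frozen for the whole of training, you can use either of the two solutions described here, since in that case it makes no difference whether you use SGD or an adaptive optimizer. However, as we have seen, when you need to freeze and unfreeze layers during training, the difference in behaviour between optimizers that depend only on the gradient and optimizers that use additional variables such as momentum becomes a problem. You can also find the complete code here.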